Apples, Oranges and User Stories

Read this on medium.com via ideas.riverglide.com.

Imagine you’d never seen an orange or an apple before. Then, imagine you read a few articles showing an image of a shiny, red fruit, saying – “This is an orange, it will help you with your vitamin C deficiency”. It’s a similar shape to the foods you’ve eaten for other vitamin deficiencies so still fits your model. You proceed to eat lots of this red, shiny, crunchy, refreshing fruit and you teach your colleagues the same. Now they’re eating “oranges”…

“You keep using that word, I do not think it means what you think it means.”

After some time, your vitamin C deficiency still hasn’t improved. So everyone says “down with oranges, they don’t work”, including some of the authors whose articles you’d read. The problem was that you were eating apples, not oranges. But it’s too late: you, your colleagues and some of those authors now have a disdain for anything labelled ‘oranges’.

This sounds ridiculous, but only because you know the difference between oranges and apples. Yet it reflects the same kind of widespread misconception that has fuelled the growing disdain I’ve seen towards the Connextra Template: As a… I want to… So that…

Summary (TL;DR):

- Some believe User Stories are analogous to product features & that they should state business benefit – this is a fundamental misunderstanding;

- User Stories are about what the user will be able to do/achieve (not actions or features they’ll do it with);

- The Connextra template (As a / I want to / So that) helps us start valuable conversations about: who the user is, what they want to do and why;

- Some tried to ‘improve’ the Connextra template to capture features and business benefit (software requirements). These ‘improvements’ spread far and wide, propagating the ‘feature’ misconception of user stories;

- It’s not too late to make the paradigm shift away from writing ‘software requirements’ to truly understand user stories as ‘the story the user wants to be able to tell’;

- Truly understand the User Story paradigm and you realise that the Connextra template is as useful as ever.

The root of the misconceptions

Think of a User Story as a short story that a user will be able to tell about what they want to do and why they want to do it. It’s not the feature the product will have or the actions they’ll perform; it’s what the user will be able to do – as in achieve. One story may actually mean subtle or significant changes to multiple features, involving several actions.

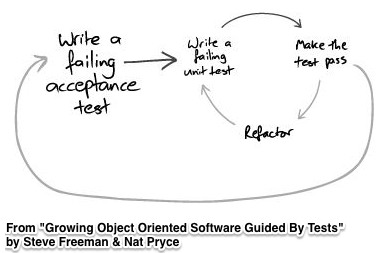

“Stories aren’t a different way to write requirements, they’re a different way to work” -Jeff Patton

User stories are a paradigm shift, a different way of working. Jeff Patton explains this superbly in slides 1-50 of his User Story Mapping presentation (I highly recommend you flip through them). Jeff highlights that “Stories aren’t a different way to write requirements, they’re a different way to work”.

The misunderstanding that User Stories are just a different way to write old-style software requirements has contributed to a continuous deterioration in how the Connextra template is communicated across the industry, changing User Stories into product features (or user actions within a feature). Product features and the actions within them generally describe the product (not the problem)… again, like old-school requirements.

“A further problem with requirements lists is that the items on the lists describe the behavior of the software, not the behavior or goals of the user” –Mike Cohn, User Stories Applied (2004) Addison Wesley

Unfortunately, the problem wasn’t with the Connextra template; it was with widespread misinterpretation of it. This misinterpretation propagated so far and wide that many teams now experience the problems caused by slicing work into product features (problems that User Stories are designed to solve). And now people are saying “User Stories are broken!” and “the Connextra Story Template doesn’t work”. Clearly, this is comparing apples and oranges, much like in the opening story.

How did this happen?

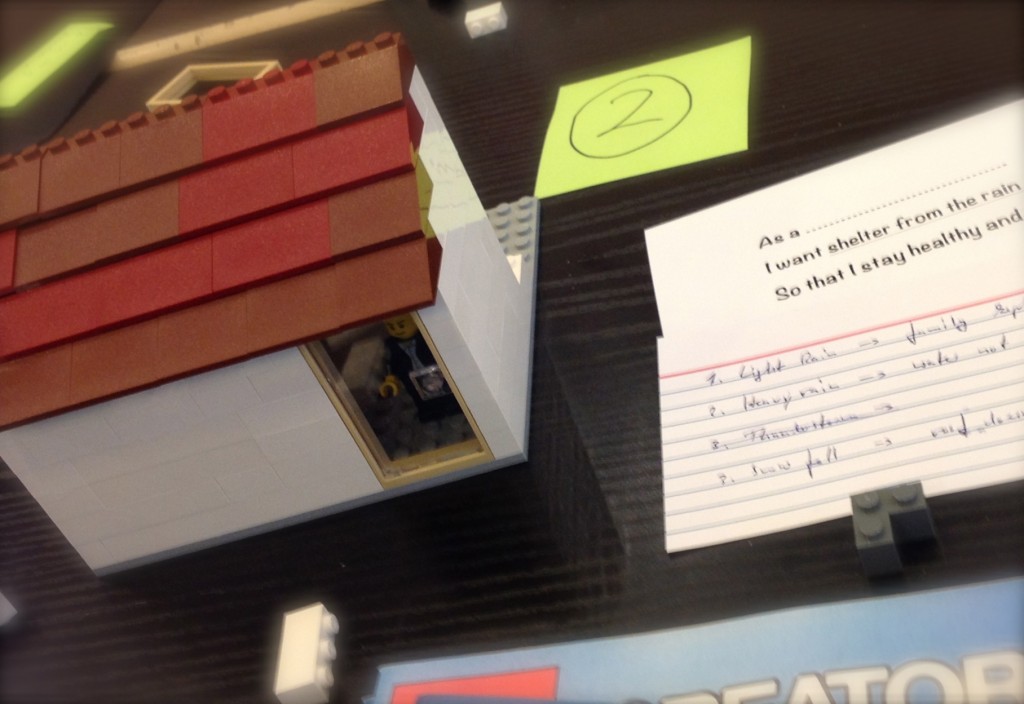

The Connextra template highlights the who, the what and the why from the user’s perspective:

As a <someone>

I want to <do something>

So that <some result or benefit>

This helps us start the right conversation: exploring the short story a user could tell about something they’ll be able to do – hence the term ‘User Story’. The “As a…” helps us start empathising with the user. The “I want to…” gets us thinking about what they want to do (not how). The “So that…” gets us closer to their motivation – why they’d want to do this.

Unfortunately, many people mistook User Stories to be just a different way to write requirements—which they’d always expressed as features & functionality. In their efforts to help colleagues and peers understand user stories in the same way, several people began sharing this template:

As a [role]

I want [feature]

So that [benefit]

Many teams found that whole features were too big to implement in a short enough window, so we also saw a variation on this where the interactions within the feature were described:

As a [role]

I want to [action]

So that [outcome]

Others, sticking with the larger feature model, asked about the ‘benefit’ part: what’s that for? Is it the benefit to the user? If it’s about the user, then where do we ‘document’ the business benefit? The question of ‘value’ and ‘business benefit’ was seen to be missing, so we started to see:

As a [role]

I want [feature]

So that [business benefit]

People didn’t always know what the specific business benefit of a feature was, so they started writing “As a… I want…” and left the “So that…” part out. So a different format was put forward to encourage thinking about the business benefit. We ended up with a catch-all for feature or action (“functionality”) plus the business benefit as the opener, which created confusion between what the business wanted and what the user wanted:

In order to <achieve some value>

as a <type of user>

I want <some functionality>

Whether it was the intent or not, I believe that these variations made it easier for teams to think they were working with User Stories when in fact they were simply capturing product features and business benefits like old-style software “requirements” – only dressed in a User Story-like template.

The opportunity

User stories are about the user – hence the name. They’re a way of working, not a way of writing requirements. This is an area we all need to get better at explaining.

What we write on the User Story card (or electronic equivalent) using the original Connextra template helps us start the right conversation about users’ needs, slicing by value to the user. Starting the right conversation to slice by business value is a different problem.

The original Connextra template works just fine for User Stories that tell a story about the user. The template wasn’t trying to fix the mindset of slicing-by-feature. Books like User Stories Applied were there for that. The misconceptions began when people assimilated User Stories through their feature-biased filters and tried to ‘fix’ the template rather than question their own understanding.

This left many teams with “features dressed as stories” caused by a fundamental, yet common, misunderstanding. Who knows, I may have once shared in that misunderstanding myself. Now, we have an opportunity to change this by learning more truths about User Stories so that we can all benefit from increased agility.

Have you been working with features dressed as stories? If so, and you want that to change, here’s a place to start.